Marine Robotics Group

MIT Computer Science and Artificial Intelligence Lab

Our Research

Our research centers on navigation for mobile robots in unknown environments. The core problem is simultaneous localization and mapping, or SLAM, in which a robot builds a map of its surroundings while concurrently estimating its own position within that map in order to achieve its mission.
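To make the idea concrete, here is a toy one-dimensional illustration, not the group's method. A robot moves along a line, records odometry between consecutive poses, and measures its offset to a single landmark from two different poses. The sketch assumes linear measurements with equal (unit) weights and a prior that fixes the first pose at 0. Under those assumptions, the trajectory and the map can be estimated jointly by linear least squares. The function names and measurement layout are hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical toy model: variables 0..N-1 are scalar positions (poses or landmarks).
# Each relative measurement (i, j, z) means "x[j] - x[i] was observed to be z".


def gauss_solve(M: List[List[float]], y: List[float]) -> List[float]:
    """Solve M x = y by Gaussian elimination with partial pivoting."""
    n = len(y)
    A = [row[:] + [y[r]] for r, row in enumerate(M)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= f * A[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(A[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (A[r][n] - s) / A[r][r]
    return x


def solve_1d_slam(num_vars: int, prior: Tuple[int, float],
                  relative: Sequence[Tuple[int, int, float]]) -> List[float]:
    """Jointly estimate poses and landmarks by unit-weight linear least squares."""
    rows: List[List[float]] = []
    rhs: List[float] = []
    idx, value = prior  # anchors one variable to remove the global translation freedom
    row = [0.0] * num_vars
    row[idx] = 1.0
    rows.append(row)
    rhs.append(value)
    for i, j, z in relative:
        row = [0.0] * num_vars
        row[j] += 1.0
        row[i] -= 1.0
        rows.append(row)
        rhs.append(z)
    # Normal equations: (A^T A) x = A^T b
    AtA = [[sum(r[a] * r[b] for r in rows) for b in range(num_vars)]
           for a in range(num_vars)]
    Atb = [sum(r[a] * z for r, z in zip(rows, rhs)) for a in range(num_vars)]
    return gauss_solve(AtA, Atb)


if __name__ == "__main__":
    # Poses x0..x3 are indices 0..3; the landmark is index 4.
    odometry = [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 1.0)]
    landmark_obs = [(0, 4, 3.5), (3, 4, 0.5)]
    print(solve_1d_slam(5, (0, 0.0), odometry + landmark_obs))
```

With consistent measurements the estimate reproduces them exactly (poses 0, 1, 2, 3 and landmark 3.5). If the second landmark measurement is changed to 0.8, the loop of five relative measurements no longer closes, and unit-weight least squares spreads the 0.3 discrepancy evenly, adjusting each measurement on the loop by 0.06 instead of trusting any single one.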

Incredible progress has been made in this field over the last 25 years. However, significant challenges remain, such as operating in difficult environments (for example, underwater), handling dynamic changes in the world, and scaling to larger maps and longer time frames.

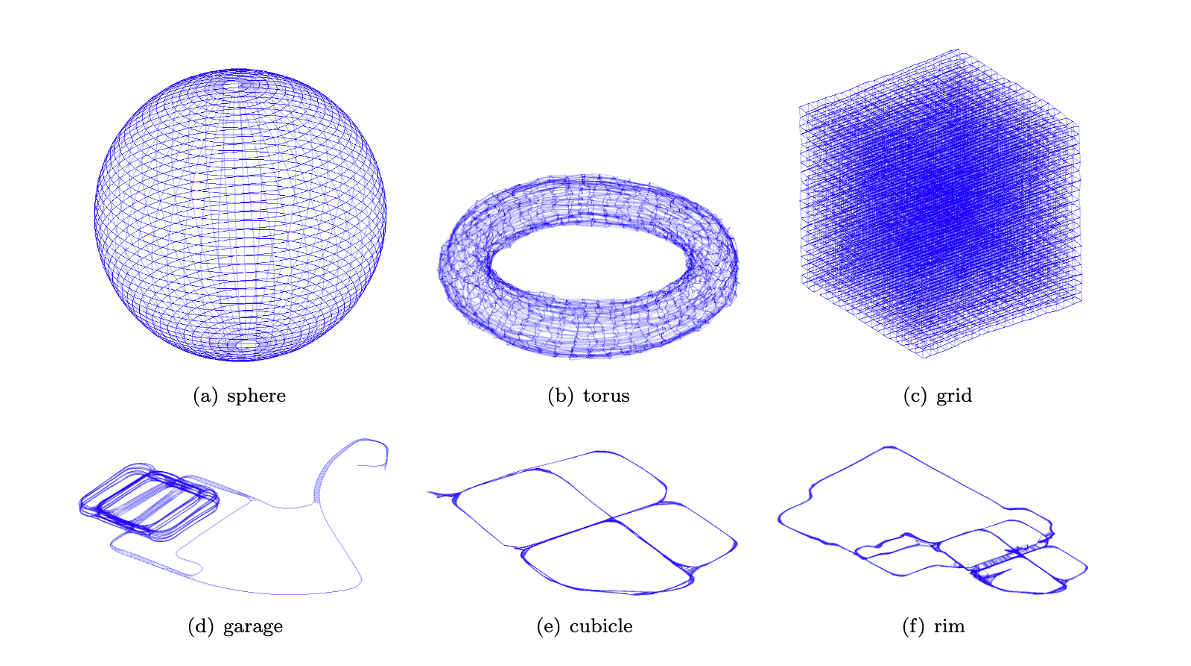

Our research focuses primarily on three themes that relate to implementing SLAM across multiple environments. As our name suggests, we have a strong interest in Marine Robotics, where we address the challenges of localization and mapping in the underwater environment. Under our Theoretical Foundations theme, we tackle fundamental SLAM problems that apply across domains, such as robustness, scalability, consistency, and map representation. New commodity RGB-D cameras provide coregistered color and depth imagery. Our work in the Object-based Mapping area focuses on object-based representations and how they can be used to aid localization.
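As a brief illustration of what coregistration provides, the sketch below back-projects each valid depth pixel into a 3D point in the camera frame and attaches the color sampled at the same pixel, using the standard pinhole camera model. The intrinsics (fx, fy, cx, cy), the depth_scale parameter, and the function name are assumptions for illustration, not part of any specific camera driver or of our own pipeline.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole intrinsics in pixels (hypothetical values supplied by the caller)."""
    fx: float
    fy: float
    cx: float
    cy: float


def back_project(depth: Sequence[Sequence[float]],
                 color: Sequence[Sequence[Color]],
                 K: Intrinsics,
                 depth_scale: float = 1.0) -> List[Tuple[float, float, float, Color]]:
    """Return (X, Y, Z, rgb) points; assumes depth[v][u] and color[v][u] see the same ray."""
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0:  # zero or negative depth is treated as missing
                continue
            z = d * depth_scale  # convert raw depth units to meters
            x = (u - K.cx) * z / K.fx
            y = (v - K.cy) * z / K.fy
            points.append((x, y, z, color[v][u]))
    return points
```

Because color and depth share a pixel grid, no separate alignment step is needed in this sketch; with unregistered sensors, each depth point would first have to be transformed into the color camera's frame before sampling its color.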

Through collaborations, we have worked on SLAM in space as part of the SPHERES/Vertigo project and have implemented SLAM with Agile Robotics.

We have also participated in robotic competitions. The DARPA Challenges were created and funded by the Defense Advanced Research Projects Agency (DARPA), the most prominent research organization of the United States Department of Defense, to stimulate the development of the first fully autonomous ground vehicles. In 2007, the goal of the DARPA Urban Challenge was to complete a 60-mile urban course in under 6 hours; MIT achieved 4th place.

More recently, the RobotX Competition was funded by the Association for Unmanned Vehicle Systems International (AUVSI) to challenge teams to develop new strategies for tackling unique and important problems in marine robotics. The joint team from MIT and Olin College was chosen as one of 15 competing teams from five nations (USA, South Korea, Japan, Singapore, and Australia) and took home first prize.

Marine Robotics

Cooperative AUV Navigation


Object-Based Representations for Navigation

Progress in machine learning, especially in computer vision, has revolutionized robot perception. We now expect robots not only to navigate the world reliably, but also to extract and use semantic cues to perform everyday tasks. Despite recent progress in learning-based perception methods, a number of challenges remain in bridging the gap between the often "static" conditions and pre-processed data used to construct datasets for tasks like object detection and recognition, and the open-set, dynamic conditions in which we would like to deploy robots.

Robust Data Association for Semantic SLAM

A critical challenge in coupling learned perception models such as object detectors into a navigation framework is handling their errors. False positives and misclassifications occur even in nominal, non-adversarial operating conditions; they may be minor for offline machine learning tasks, but they can cause critical failures during navigation if they trigger bad loop closures. In the lifelong setting (as "time goes to infinity"), we cannot expect these problems to disappear entirely, even as learned models improve. Our research in this area focuses on developing robust models with efficient representations that can cope with errors caused by object detector failures, combining individual (possibly corrupted) measurements into a globally coherent model.
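One common way to make data association robust is a max-mixture. This is in the spirit of the mixture-model approach named in the publications listed under Related Publications, though it does not reproduce their exact formulation. Each detection is scored against every candidate landmark and against an explicit "false positive" hypothesis, and only the most likely component is kept. The sketch makes several hypothetical assumptions: 2D object positions, an isotropic Gaussian measurement model with standard deviation sigma, a simple label-confusion probability, and a fixed null_cost for the false-positive hypothesis.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Landmark:
    name: str
    position: Tuple[float, float]
    label: str  # semantic class, e.g. "chair"


def gaussian_nll(residual: Tuple[float, float], sigma: float) -> float:
    """Negative log density of an isotropic 2D Gaussian evaluated at `residual`."""
    sq = residual[0] ** 2 + residual[1] ** 2
    return sq / (2.0 * sigma ** 2) + math.log(2.0 * math.pi * sigma ** 2)


def max_mixture_associate(det_xy: Tuple[float, float], det_label: str,
                          landmarks: Sequence[Landmark], sigma: float = 0.5,
                          p_label_correct: float = 0.9,
                          null_cost: float = 8.0) -> Tuple[Optional[str], float]:
    """Pick the lowest-cost hypothesis; None means 'treat as a false positive'."""
    best_name: Optional[str] = None
    best_cost = null_cost  # hypothetical cost of the outlier (null) component
    for lm in landmarks:
        residual = (det_xy[0] - lm.position[0], det_xy[1] - lm.position[1])
        # Semantic term: the detector's label is wrong with prob. 1 - p_label_correct.
        p_label = p_label_correct if lm.label == det_label else 1.0 - p_label_correct
        cost = gaussian_nll(residual, sigma) - math.log(p_label)
        if cost < best_cost:  # max-mixture: keep only the most likely component
            best_name, best_cost = lm.name, cost
    return best_name, best_cost
```

In a full optimizer this choice would be re-made at every iteration as the landmark estimates move. In the sketch, a detection far from every known object falls through to the null hypothesis instead of being forced into an association that could become a bad loop closure.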

Related Publications

- K. Doherty, D. Baxter, E. Schneeweiss, and J. Leonard, “Probabilistic Data Association via Mixture Models for Robust Semantic SLAM,” in IEEE Intl. Conf. on Robotics and Automation (ICRA), 2020.

- K. Doherty, D. Fourie, and J. Leonard, “Multimodal Semantic SLAM with Probabilistic Data Association,” in IEEE Intl. Conf. on Robotics and Automation (ICRA), 2019, pp. 2419–2425.

Theoretical Foundations

Certifiably-Correct SLAM